Multi-Stream Support in Linux V4L2: History and Current Status

Introduction

In modern camera systems, it’s common to have multiple data streams being transmitted via a single cable. A simple example of this is a MIPI CSI-2 camera sensor that sends two streams: a video stream containing the pixel frames from the sensor, and an embedded-data stream, which contains non-pixel metadata related to frames. A more complex setup might have 4 cameras, each sending two streams, which are then all multiplexed together to be transmitted over a single MIPI CSI-2 link.

Multi-stream (or just “streams”) support is not yet available in the upstream Linux kernel, but we are getting there. Most of the core features are in place, and several drivers already support multiple streams, though all of this is still hidden from userspace by a kernel compilation flag.

In this post I’ll cover the history of multi-stream support from my perspective and as I remember it, discuss the missing pieces, and show the platforms I’ve been using for development.

The Beginning

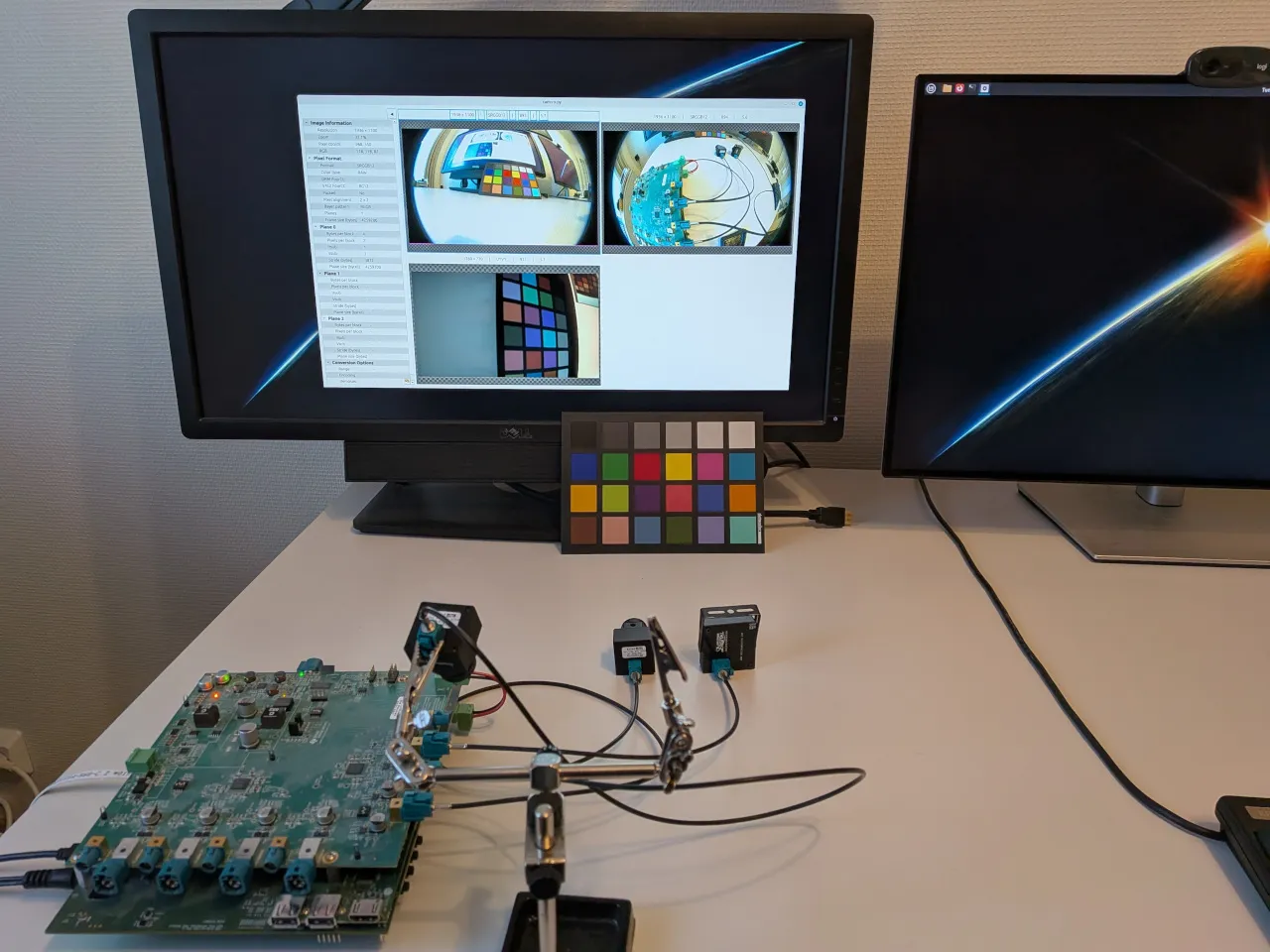

My work on multi-stream support started in early 2021. The target was to use multiple cameras via Texas Instruments’ (TI) FPD-Link III, on TI’s DRA76 platform.

FPD-Link III is a high-speed serial interface technology developed by Texas Instruments for transmitting video, control, and power over a single coaxial cable with support for much longer cable lengths than parallel or MIPI CSI-2 interfaces. FPD-Link III is commonly used in automotive and industrial camera systems and display applications.

The simplest camera FPD-Link setup contains a sensor, FPD-Link serializer, FPD-Link deserializer, and the receiver device. In this specific case we had a deserializer that supports four FPD-Link inputs, thus four sensors and serializers, with each sensor sending both a video stream and an embedded-data stream.

DRA76’s CSI-2 receiver is called “CAL”, as in Camera Adaptation Layer. It can store the received CSI-2 data in multiple memory buffers (up to 8), using the CSI-2 datatype (DT) and virtual channel (VC) to make the routing decisions:

┌────────┐ ┌────────────┐
│Sensor 1│─│Serializer 1│─┐
└────────┘ └────────────┘ │                                    ┌─────┐
                          │  ┌──────────────┐  ┌─────────┐     │ DMA │
┌────────┐ ┌────────────┐ │  │              │  │ CSI-2 Rx│    ┌└─────┘
│Sensor 2│─│Serializer 2│─┼──│ Deserializer │──│  (CAL)  │────│ DMA │
└────────┘ └────────────┘ │  │              │  └─────────┘   ┌└─────┘
                          │  └──────────────┘                │ DMA │
┌────────┐ ┌────────────┐ │                                  └─────┘
│Sensor 3│─│Serializer 3│─┤
└────────┘ └────────────┘ │
                          │
┌────────┐ ┌────────────┐ │
│Sensor 4│─│Serializer 4│─┘
└────────┘ └────────────┘

Representing this pipeline with V4L2 would have required quite an inconvenient and hardcoded V4L2 pipeline structure:

- Multiple streams would have required multiple media pads, as each pad transports a single implicit stream.

- The number of media pads would have been hardcoded at driver probe time, as the number of pads cannot change dynamically.

- Routing decisions would have been hardcoded, e.g. virtual channel N would always go to pad X, as there was no routing configuration support.

So we needed something more dynamic, where the user could change the number of streams at runtime, configure the pixel formats and settings for each stream, and configure the routing.

Half of the solution was to decouple streams from pads by introducing the concept of “stream” to the API, identified by stream numbers. You could think of a “stream” in a subdevice’s pad as a “virtual pad”: instead of configuring the pixel format for pad 1, you could configure the pixel format for stream 2 on pad 1.

This method had the benefit of being backwards compatible. A subdevice without streams support would have a single stream on each pad, identified with stream number 0, so the same configuration ioctls could be used for legacy and streams-enabled subdevices.
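The “virtual pad” idea can be sketched with a small Python model. This is not the kernel API: the SubdevFormats class, its method names, and the format strings are all hypothetical. The sketch only illustrates formats being keyed by (pad, stream) rather than by pad alone, with stream 0 as the default so that a legacy-style call keeps working.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Format:
    width: int
    height: int
    code: str  # media bus code, kept as an illustrative string in this model


@dataclass
class SubdevFormats:
    """Hypothetical model: formats are stored per (pad, stream), not per pad."""
    formats: dict = field(default_factory=dict)

    def set_format(self, pad: int, fmt: Format, stream: int = 0) -> None:
        # A legacy caller never passes `stream`, so it addresses stream 0.
        self.formats[(pad, stream)] = fmt

    def get_format(self, pad: int, stream: int = 0) -> Format:
        return self.formats[(pad, stream)]


subdev = SubdevFormats()
# Legacy-style call: pad 1, implicit stream 0 (the video stream here).
subdev.set_format(1, Format(1920, 1080, "SRGGB10_1X10"))
# Streams-aware call: a second stream on the same pad (embedded data).
subdev.set_format(1, Format(1920, 2, "META_8"), stream=1)
```

Both calls go through the same function, which mirrors how the same configuration ioctls serve legacy and streams-enabled subdevices.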

The other half of the solution was adding the routing table for subdevices. The routing table serves two main purposes:

- It dynamically specifies which streams are available.

- It defines how the input streams on the subdevice’s sink pads are routed to the output streams on the source pads.
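Both purposes of the routing table can be illustrated with a rough Python model of the four-input deserializer described earlier. The Route tuple, the helper names, and the pad numbering (sink pads 0 to 3, source pad 4) are assumptions made for this sketch, not the kernel's data structures.

```python
from typing import NamedTuple


class Route(NamedTuple):
    sink_pad: int
    sink_stream: int
    source_pad: int
    source_stream: int
    active: bool = True


def build_deser_routes(num_inputs: int = 4, streams_per_input: int = 2,
                       source_pad: int = 4) -> list[Route]:
    """Route every stream of every sink pad to a unique stream on one source pad."""
    routes = []
    for sink_pad in range(num_inputs):
        for sink_stream in range(streams_per_input):
            source_stream = sink_pad * streams_per_input + sink_stream
            routes.append(Route(sink_pad, sink_stream, source_pad, source_stream))
    return routes


def streams_on_pad(routes: list[Route], pad: int) -> set[int]:
    """The streams a pad carries are derived from the active routes."""
    streams = set()
    for r in routes:
        if not r.active:
            continue
        if r.sink_pad == pad:
            streams.add(r.sink_stream)
        if r.source_pad == pad:
            streams.add(r.source_stream)
    return streams


routes = build_deser_routes()
```

In this model, changing the list of routes is all it takes to change how many streams exist on each pad, which is the dynamic behaviour that fixed pads could not provide.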

There had been some multi-stream attempts from a few different people before I started my work (at least Laurent Pinchart, Jacopo Mondi and Sakari Ailus were involved), but nothing was ever merged upstream. Luca Ceresoli had also worked on FPD-Link III drivers (not quite the same devices as I had, but close enough) and I was able to use his patches as a base.

So I wasn’t quite starting from scratch, although a lot of redesign and new design had to be done, and the solution that was later merged upstream did not much resemble the earlier versions.

I can’t say for sure when I first had a working multi-stream pipeline prototype running, but based on the dates of some emails I had sent, I would guess somewhere around March 2021.

Another thing I can’t remember is how I tested the very early versions. New ioctls were needed to configure the streams, so there were no existing userspace tools to use. But the Internet remembers, so when writing this, I found out that I had extended my kms++ project for testing streams. And indeed, I found the relevant kms++ branch. Looks like I had the core V4L2 code in C++ with Python bindings on top, and a Python app (cam.py) to control it all. The app was run on the device, and it showed the frames on an HDMI display using KMS.

Today I have pixutils, pyv4l2 and pykms that I use for testing, often streaming the frames over Ethernet to my desktop, which then processes and shows the frames.

The Early

After I had a working prototype pipeline, the work of improving the design and upstreaming features started.

As often happens, there were many things to improve and restructure in the existing code before a new big feature could be added. Looking back at the kernel’s git history, this was perhaps the first series stemming from the multi-stream work that made it upstream, merged in June 2021:

[PATCH v2 0/3] media: add vb2_queue_change_type() helper

It adds the vb2_queue_change_type() helper, which was needed because, due to the versatile nature of multi-stream, it wasn’t possible to dedicate a video node specifically for video or metadata capture, as the user could change the routing of the streams.

That was followed by the subdev-wide state support:

[PATCH v5 0/9] media: v4l2-subdev: add subdev-wide state struct

This was needed to better support the routing configuration for subdevs, but with the later additions of active state and state locking ([PATCH v8 00/10] v4l: subdev active state, around April 2022), I think the whole subdev state concept was a big improvement even for single-stream use cases.

There were also plenty of patches merged around July 2021 for CAL, where I was restructuring the driver in an effort to prepare it for easier adaptation to multi-stream support: [PATCH v4 00/35] media: ti-vpe: cal: improvements towards multistream.

The Middle

The work continued, adding V4L2 features needed for multi-stream support and implementing streams support for the device drivers in my setup. Smaller miscellaneous patches were merged during 2022, along with the important subdev active state series mentioned above.

In January 2023, after 16 iterations, a milestone series was merged:

[PATCH v16 00/20] v4l: routing and streams support

This series added the core infrastructure for multi-stream support, including routing ioctls, stream IDs and subdev operations to enable/disable individual streams. The patch that added the flag to hide the multi-stream userspace APIs was also merged in this series:

8a54644571fe media: subdev: Require code change to enable [GS]_ROUTING

This was necessary because we were adding userspace-visible features, like the new G_ROUTING and S_ROUTING ioctls and the stream IDs. While capturing multiple streams was working fine on my local branch with my development environment, there was still a lot to do and we weren’t sure if the userspace API would have to be changed. And the flag turned out to be a good thing, as the userspace API had to be changed later.

Note that the flag only hides specific features from userspace. The kernel drivers can use all the features available for streams. In other words, a driver could be fully streams-enabled, but with the features hidden, userspace would only see a single stream from that device.

Hint: If you want to enable streams support in your kernel, have a look at the commit above, and either revert it or set the v4l2_subdev_enable_streams_api variable.

In July 2023, the FPD-Link III drivers, along with I2C ATR support, were merged:

[PATCH v15 0/8] i2c-atr and FPDLink

The FPD-Link drivers were fully streams-enabled from the start and, if I’m not mistaken, they were the first drivers upstream that had full streams support. Along with the FPD-Link drivers, the I2C Address Translator feature was merged, which allowed addressing the remote devices (i.e. the cameras) via I2C.

We (mostly me, Laurent, and Sakari) kept gradually adding more features and improving the existing ones. The next clear milestone was merged in October 2024:

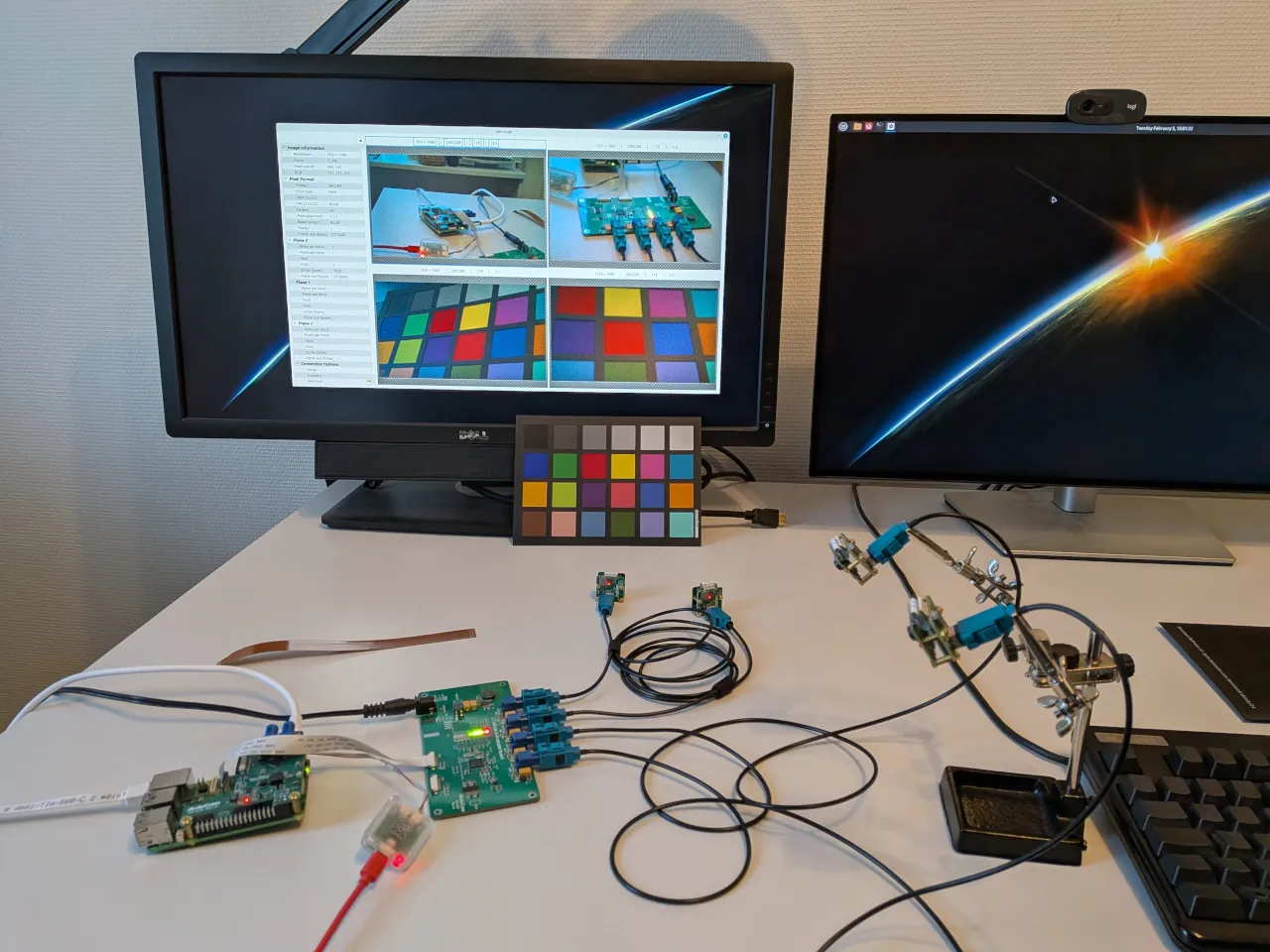

[PATCH v6 0/4] media: raspberrypi: Support RPi5’s CFE

The Raspberry Pi 5 CFE driver was (again, if I’m not mistaken) the first V4L2 platform driver that had full streams support. While the CAL driver had had streams support for much longer on my local branch, for a few reasons the focus had moved to Raspberry Pi CFE development and it got upstream first.

But the CAL driver wasn’t that far behind. In April 2025, CAL streams support was finally merged, and it was just a single commit, as the groundwork had been done properly earlier:

[PATCH v7 0/3] media: ti: cal: Add streams support

Current Status

As mentioned, as of today, in February 2026, multi-stream support upstream is still behind that kernel compilation flag. But we have multiple platform drivers and device drivers supporting streams, so what is missing?

Sakari has been working on a series that adds the finishing touches and also removes the enable-streams kernel flag, thus removing the experimental status of streams:

[PATCH v11 00/66] Generic line based metadata support, internal pads

It’s a big one, containing patches for a wide range of topics, and will probably be broken into somewhat smaller pieces.

It adds an interesting new concept: internal pads. Internal pads are sink pads that represent internal sources. For example, a sensor subdevice could have two internal pads, one producing the video stream and one producing the embedded data stream.

Note the somewhat interesting terms here, “sink pads that represent sources”… A normal sink pad is connected to a source pad on another subdevice, and thus the subdevice in question receives data via that sink pad. You can think of the internal pads as sink pads that are implicitly connected to an invisible internal source, like the sensor pixel array. Thus the subdevice receives data via the internal pads.

Technically an internal pad could have multiple streams, but the convention is to use a single pad for a single source, thus the sensor mentioned above would have two internal pads, each with a single stream, instead of a single internal pad with two streams.

The subdevice routing table can be used to enable or disable the streams from the internal pads, and having the internal pads allows us more precise control over e.g. the pixel format: the video stream on the internal pad can have the sensor’s native resolution and pixel format, whereas the video stream on the subdevice’s source pad can have the output resolution and format. Or, in the case of embedded data, the format on the internal pad can be a sensor specific format specifying exactly what the content will be, whereas the format on the source pad can be a more generic container format, allowing the transport of the embedded stream via other subdevices without the other subdevices having to understand the camera’s internal embedded data format.
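A sensor with internal pads can be sketched in the same spirit. This is a toy Python model, not code from the series: the pad numbering, the format labels, and the resolutions are invented for illustration. It shows two internal pads with one stream each, routed to two streams on the source pad, with different formats on each side of the route.

```python
from typing import NamedTuple


class Route(NamedTuple):
    sink_pad: int
    sink_stream: int
    source_pad: int
    source_stream: int
    active: bool


# Hypothetical pad numbering for this sketch.
SOURCE_PAD = 0          # the sensor's only external pad
INTERNAL_PIXELS = 1     # internal sink pad: pixel array
INTERNAL_EMBEDDED = 2   # internal sink pad: embedded data

# Formats are keyed by (pad, stream); labels and sizes are illustrative only.
formats = {
    (INTERNAL_PIXELS, 0): ("native 10-bit Bayer", 4056, 3040),
    (INTERNAL_EMBEDDED, 0): ("sensor-specific embedded data", 4056, 2),
    (SOURCE_PAD, 0): ("output 10-bit Bayer", 2028, 1520),
    (SOURCE_PAD, 1): ("generic 8-bit metadata container", 4056, 2),
}


def sensor_routes(embedded_enabled: bool) -> list[Route]:
    """Each internal pad carries one stream; both are routed to the source pad."""
    return [
        Route(INTERNAL_PIXELS, 0, SOURCE_PAD, 0, True),
        Route(INTERNAL_EMBEDDED, 0, SOURCE_PAD, 1, embedded_enabled),
    ]


def output_streams(routes: list[Route]) -> list[int]:
    """Streams leaving the source pad, i.e. the active routes only."""
    return sorted(r.source_stream for r in routes
                  if r.active and r.source_pad == SOURCE_PAD)
```

Disabling the embedded-data route leaves only stream 0 on the source pad, while the formats on the internal pads stay untouched.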

Although it is not kernel related, we also need to consider userspace before we can really say that multi-stream is done. What use is the streams support if no one uses it? This brings in libcamera. There has been some work done in libcamera to support streams, but the feature should still be considered a work in progress, as the kernel side is not finished.

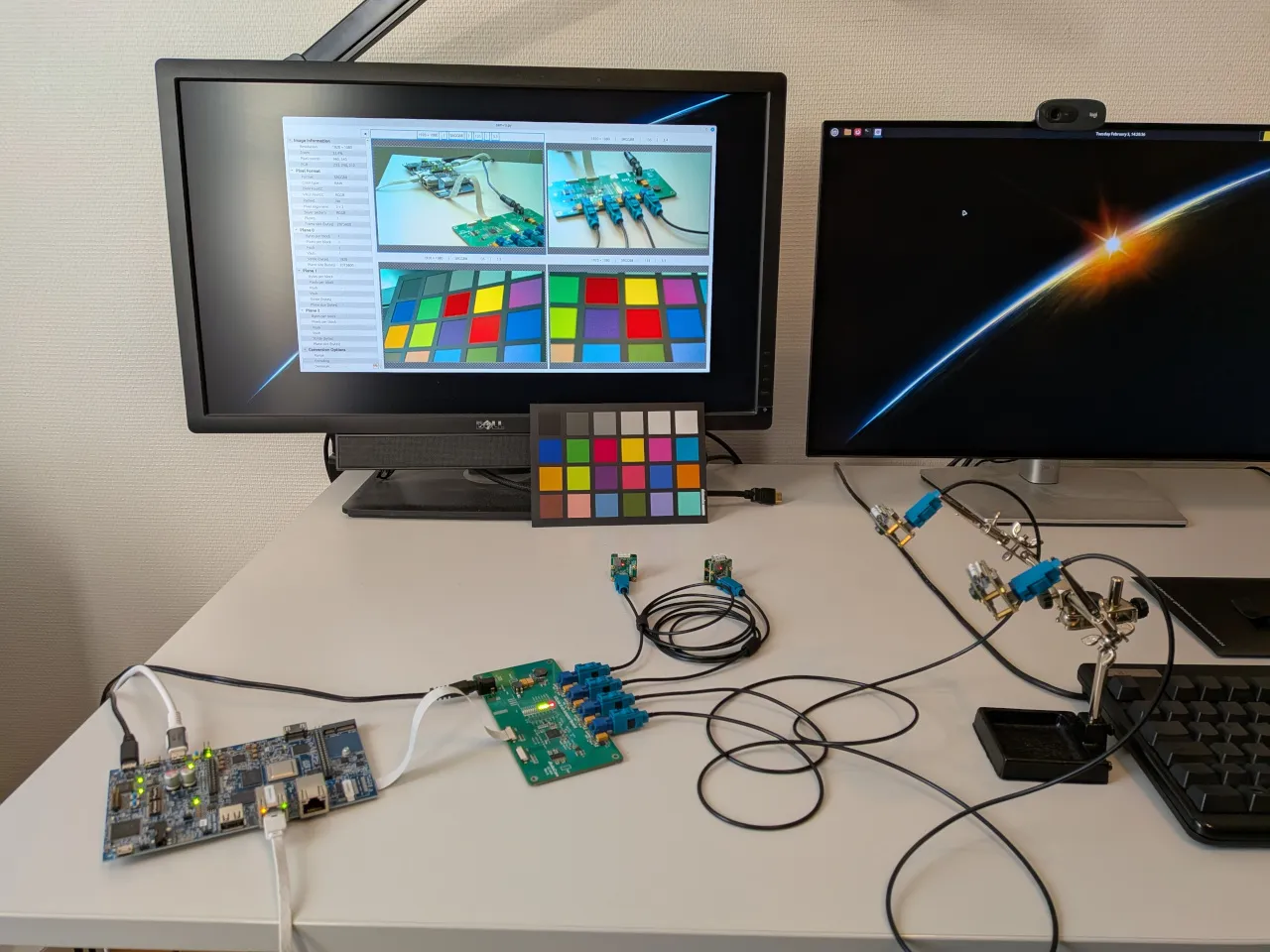

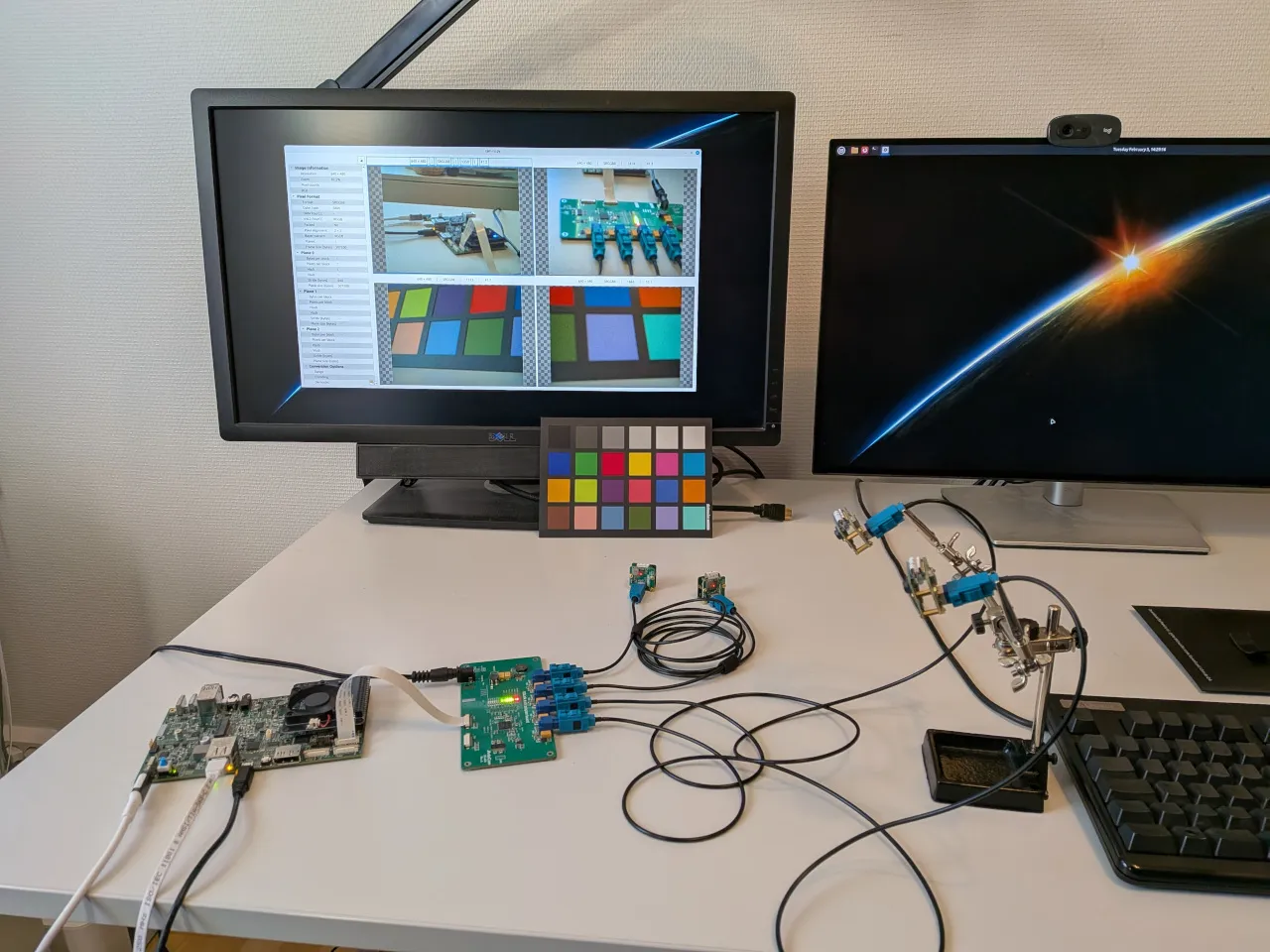

More Platforms and Devices

The two platforms mentioned earlier, TI DRA76 (or more broadly, TI’s older 32-bit platforms with CAL) and Raspberry Pi 5, have upstream streams support and can be used with FPD-Link with a relatively small number of extra patches on top of upstream. And now that the core infrastructure for streams is upstream, more vendors are working on adding multi-stream support to their platforms.

TI’s 64-bit K3 platforms will soon have streams support merged: [PATCH v10 00/18] media: cadence,ti: CSI2RX Multistream Support

This enables streams on many boards based on TI K3 SoCs, e.g. AM62A SK from TI, BeagleBone AI-64 from Beagleboard, and AM69 Aquila from Toradex.

Also, as more and more vendors have started using the Raspberry Pi compatible 4-lane CSI-2 connector on their boards, the same cameras and deserializer boards work interchangeably between them.

After TI, Renesas will most likely be the next platform to support streams as the series is nearing completion: [PATCH v4 00/15] media: rcar: Streams support

We are also seeing more subdevice drivers adding streams, for example [PATCH v7 00/24] media: i2c: add Maxim GMSL2/3 serializer and deserializer drivers, which adds drivers for GMSL 2/3 serializers and deserializers.

Conclusion

It has been a long road, five years from the start, and we are not quite there yet. This is often the reality of upstream kernel development for complex changes, as a lot of care has to be taken to not introduce regressions, not crash the kernel, preserve userspace ABI compatibility, and design the new userspace ABIs so that you are as certain as you can be that they will cover future changes and features too.

Add to that the always changing business priorities, and it can take a long time to get a feature upstream, even if you already have it running on your local branch.

But this is the first time I have felt that streams support is actually nearing completion. We have all the pieces; now we just need to add the final polish and merge.

Many companies, including Texas Instruments, Intel, Raspberry Pi, Renesas, and, of course, Ideas on Board, have been involved in the work: people discussing the design, doing the development, testing and reviewing patches. Laurent Pinchart and Sakari Ailus in particular have been key people in the design and implementation. This has truly been a community effort, and it’s great to see it all coming together and being able to say “yes, Linux supports multi-stream capture!”.